Businesses have always relied on data. The ability to gather data, analyze it, and act on it has long been a key part of success, which makes effective data management critical.

In the past few years, data has exploded in size and complexity. By one widely cited projection, the amount of data created, captured, copied, and consumed worldwide will hit 181 zettabytes by 2025, up from only two zettabytes in 2010.

This growth has made it difficult for businesses to gather, analyze, and act on data promptly. DataOps (data operations) is a methodology created to address this very problem.


What is DataOps?

Introduced by Lenny Liebmann in a June 2014 post on IBM's Big Data & Analytics Hub, DataOps is a collection of best practices, techniques, processes, and solutions that applies integrated, process-oriented, and agile software engineering methods to data analytics. Its aim is to automate work, improve quality and speed, and strengthen collaboration while encouraging a culture of continuous improvement.

DataOps began as a collection of best practices but has since matured into an independent approach to data analytics. It accounts for the interdependence of the data analytics team and IT operations throughout the data lifecycle, from preparation to reporting.


What is the Purpose of DataOps?

DataOps aims to enable data analysts and engineers to work together more effectively to achieve better data-driven decision-making. The ultimate goal of DataOps is to make data analytics more agile, efficient, and collaborative.

DataOps rests on three main pillars:

- Automation: Automating data processes allows for faster turnaround times and fewer errors.

- Quality: Improving data quality through better governance and standardized processes leads to improved decision-making.

- Collaboration: Effective team collaboration leads to a more data-driven culture and better decision-making.

DataOps Framework

The DataOps framework is composed of four main phases:

- Data ingestion handles data collection and storage. Engineers must collect data from various sources before it can be processed and analyzed.

- Data preparation involves data cleansing, transformation, and enrichment, which is crucial because it ensures the data is ready for analysis.

- Data processing uses transformation and data modeling to turn raw data into usable information.

- Data analysis and reporting helps businesses make better decisions by analyzing data for trends, patterns, and relationships and reporting the results.
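To make the four phases concrete, here is a minimal Python sketch that runs each one as a plain function over a few in-memory records. The record fields (`region`, `amount`) and the function names are hypothetical, and ingestion runs first because data must be collected before it can be prepared; a real DataOps pipeline would read from actual sources and hand each phase to dedicated tooling.

```python
from collections import defaultdict

# Hypothetical raw records, as they might arrive from two different sources.
SOURCE_A = [{"region": " north ", "amount": "120.50"}, {"region": "South", "amount": "80"}]
SOURCE_B = [{"region": "north", "amount": None}, {"region": "south", "amount": "19.5"}]


def ingest(*sources):
    """Ingestion: collect records from every source into one list."""
    return [record for source in sources for record in source]


def prepare(records):
    """Preparation: drop incomplete rows, normalize text, and cast types."""
    cleaned = []
    for record in records:
        if record["amount"] is None:
            continue  # cleansing: skip rows with a missing amount
        cleaned.append({
            "region": record["region"].strip().lower(),
            "amount": float(record["amount"]),
        })
    return cleaned


def process(records):
    """Processing: model the cleaned rows as the total amount per region."""
    totals = defaultdict(float)
    for record in records:
        totals[record["region"]] += record["amount"]
    return dict(totals)


def analyze(totals):
    """Analysis and reporting: turn the model into human-readable lines."""
    return [f"{region}: {total:.2f}" for region, total in sorted(totals.items())]


report = analyze(process(prepare(ingest(SOURCE_A, SOURCE_B))))
for line in report:
    print(line)
```

Keeping each phase a separate function mirrors the DataOps idea that every stage can be tested, automated, and improved on its own.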

DataOps tools operate as command centers for DataOps. These solutions manage people, processes, and technology to provide a reliable data pipeline to customers.

In addition, these tools are primarily used by analytics and data teams across different functional areas and multiple verticals to unify all data-related development and operation processes within an enterprise.

When choosing a DataOps tool, businesses should look for the following features:

- Collaboration: It should support collaboration between data providers and consumers to keep data flowing smoothly.

- End-to-End Coverage: It should combine different data management practices within a single platform.

- Automation: It should automate data workflows across the entire data integration lifecycle.

- Dashboards and Visualization: It should include tools that help stakeholders analyze and collaborate on data.

- Cloud Flexibility: It should be deployable in any cloud environment.


5 Best DataOps Tools and Software

The following are five of the best DataOps tools and software.

Census

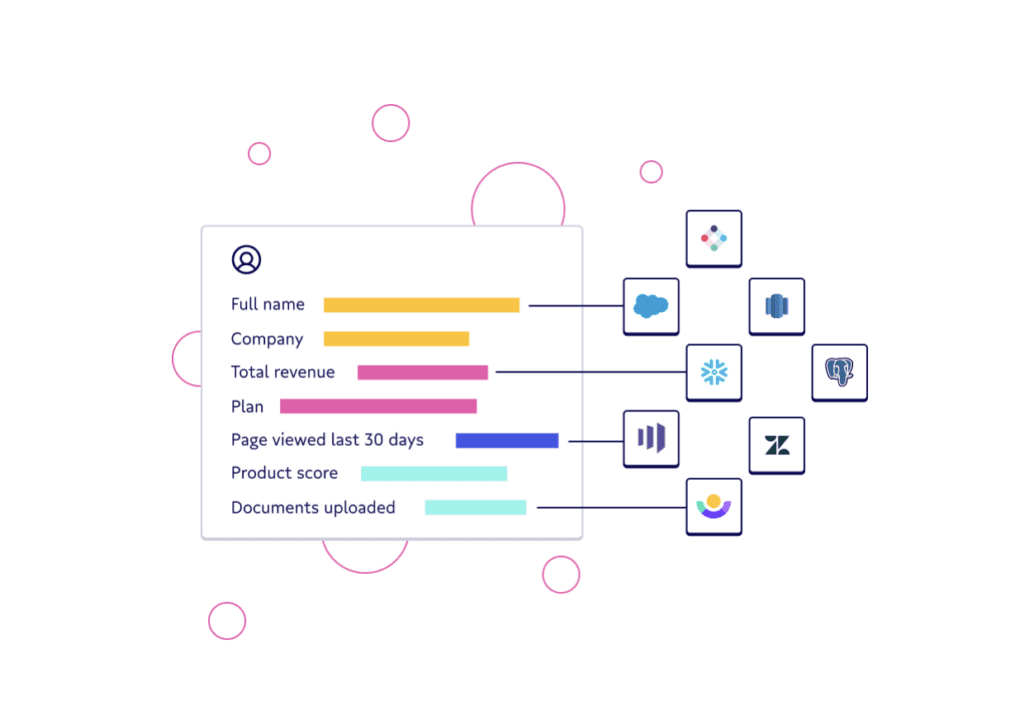

Census is an operational analytics platform built around reverse ETL (extract, transform, load), offering a single, trusted location for bringing your warehouse data into the applications your teams use every day.

It sits on top of your existing warehouse and connects the data from all of your go-to-market tools, allowing everyone in your company to act on good information without requiring any custom scripts or favors from IT.
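Reverse ETL runs the usual direction backwards: instead of loading data into the warehouse, it reads modeled data out of the warehouse and writes it into operational tools. The sketch below illustrates that idea only and is not how Census is implemented. An in-memory SQLite database stands in for the warehouse, and `FakeCrmClient`, the `customer_model` table, and the destination field names are all hypothetical.

```python
import sqlite3


class FakeCrmClient:
    """Hypothetical stand-in for a CRM API; stores contacts keyed by email."""

    def __init__(self):
        self.contacts = {}

    def upsert_contact(self, email, fields):
        # Real destinations expose their own APIs; this only mimics an upsert.
        self.contacts.setdefault(email, {}).update(fields)


def reverse_etl_sync(conn, crm, field_mapping):
    """Read modeled rows from the warehouse and push them to the destination."""
    columns = ", ".join(field_mapping)  # warehouse column names
    rows = conn.execute(f"SELECT email, {columns} FROM customer_model").fetchall()
    for email, *values in rows:
        # Rename warehouse columns to the destination's field names.
        fields = {field_mapping[col]: val for col, val in zip(field_mapping, values)}
        crm.upsert_contact(email, fields)
    return len(rows)


# Hypothetical "warehouse" with one modeled table.
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE customer_model (email TEXT, lifetime_value REAL, plan TEXT)")
warehouse.executemany(
    "INSERT INTO customer_model VALUES (?, ?, ?)",
    [("ana@example.com", 1200.0, "pro"), ("bo@example.com", 90.0, "free")],
)

crm = FakeCrmClient()
synced = reverse_etl_sync(warehouse, crm, {"lifetime_value": "LifetimeValue", "plan": "Plan"})
print(synced, crm.contacts["ana@example.com"])
```

The mapping dictionary is the interesting part: it is where a warehouse column becomes a field in a sales or marketing tool, which is the step that no-code reverse ETL products replace with a UI.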

According to Census, its clients use the platform to deliver personalized marketing to more than 50 million users, and some report a 10x increase in sales productivity and up to a 98% reduction in support time.

In addition, many modern organizations choose Census for its security, performance, and dependability.

Key Features

- Work With Your Existing Warehouse: Because Census operates on top of your current warehouse, you can retain all your data in one location without the need to migrate to another database.

- No-Code Business Models: With the simple interface, you can build data models without writing code, allowing you to focus on your business instead of worrying about data engineering.

- Works at Scale: Census is built to handle data warehouses with billions of rows and hundreds of columns.

- Build Once, Reuse Everywhere: After you create a data model, you can use it in any tool connected to your warehouse. This means that you can build models once and use them in multiple places without having to recreate them.

- No CSV Files and Python Scripts: There is no need to export data to CSV files or write Python scripts. Census has a simple interface that allows you to build data models to integrate into sales and marketing tools without writing code.

- Fast Sync With Incremental Batch Updates: Census syncs data in incremental batches, sending only the records that have changed since the previous sync. Your tools stay up to date, and you never have to wait for a complete data refresh.

- Multiple Integrations: Census integrates with many of the sales, marketing, collaboration, and communications tools you already use, such as Salesforce, Slack, Marketo, and Google Sheets, and connects to data sources such as Snowflake and MySQL.
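Incremental batch updates rest on a simple idea: compare the current state of the source with what was sent last time and push only the difference. The sketch below assumes every row has a stable primary key and that the previous sync's rows are available as a dictionary; it shows the general technique, not Census's actual change-detection mechanism, and `compute_changes` is a hypothetical helper.

```python
def compute_changes(previous, current):
    """Return rows to upsert and keys to delete, given two {key: row} snapshots."""
    upserts = {
        key: row
        for key, row in current.items()
        if previous.get(key) != row  # new key, or an existing row whose values changed
    }
    deletes = sorted(key for key in previous if key not in current)
    return upserts, deletes


last_sync = {
    1: {"email": "ana@example.com", "plan": "free"},
    2: {"email": "bo@example.com", "plan": "pro"},
    3: {"email": "cy@example.com", "plan": "pro"},
}
now = {
    1: {"email": "ana@example.com", "plan": "pro"},   # changed
    2: {"email": "bo@example.com", "plan": "pro"},    # unchanged, not re-sent
    4: {"email": "di@example.com", "plan": "free"},   # new
}

upserts, deletes = compute_changes(last_sync, now)
print(sorted(upserts), deletes)  # [1, 4] [3]
```

Only two of the three current rows are sent, which is why incremental syncs avoid a full refresh as tables grow.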

Pros

- It is easy to set up and sync a data pipeline.

- Census offers responsive and helpful support.

- The solution reduces engineering time to create a sync from your data warehouse to third-party services.

Cons

- Many integrations are still in active development and are buggy to use.

Pricing

Census has four pricing tiers:

- Free: This tier only includes 10 destination fields but is ideal for testing the tool’s features.

- Growth: At $300 per month, Growth includes 40 destination fields as well as a free trial.

- Business: At $800 per month, Business includes 100 destination fields and a free demo.

- Platform: This is a custom solution for enterprises that would like more than 100 destination fields, multiple connections, and other bespoke features.

Mozart Data

Mozart Data is a simple out-of-the-box data stack that can help you consolidate, arrange, and get your data ready for analysis without requiring any technical expertise.

With only a few clicks, SQL commands, and a couple of hours, you can make your unstructured, siloed, and cluttered data of any size and complexity analysis-ready. In addition, Mozart Data provides a web-based interface for data scientists to work with data in various formats, including CSV, JSON, and SQL.

Mozart Data is also easy to set up and use. It integrates with various data sources, including Amazon SNS, Apache Kafka, MongoDB, and Cassandra, and it provides a flexible data modeling layer that lets data scientists work with data in various ways.

Key Features

- Over 300 Connectors: Mozart Data has over 300 data connectors that make it easy to get data from various data sources into Mozart Data without hiring a data engineer. You can also add custom connectors.

- No Coding or Arcane Syntax: With Mozart Data, you don't need to learn a programming language or arcane syntax to get started. You can get your data into the platform by pointing and clicking.

- One-Click Transform Scheduling and Snapshotting: Mozart Data allows you to schedule data transformations with a single click. You can also snapshot your data to roll back to a previous version if needed.

- Sync Your Favorite Business Intelligence (BI) Tools: Mozart Data integrates with most leading BI tools, including Tableau, Looker, and Power BI.
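Snapshotting amounts to saving a copy of a table each time a transformation runs so that an earlier version can be restored later. Here is a minimal in-memory sketch of that idea; the `SnapshotStore` class is hypothetical and says nothing about how Mozart Data stores snapshots internally.

```python
import copy


class SnapshotStore:
    """Hypothetical store that keeps numbered copies of a table's rows."""

    def __init__(self):
        self._versions = []

    def snapshot(self, rows):
        # Deep-copy so later edits to `rows` cannot alter the saved version.
        self._versions.append(copy.deepcopy(rows))
        return len(self._versions) - 1  # version number of this snapshot

    def rollback(self, version):
        # Return a fresh copy so the stored snapshot stays untouched.
        return copy.deepcopy(self._versions[version])


store = SnapshotStore()
table = [{"id": 1, "status": "active"}]
v0 = store.snapshot(table)

table[0]["status"] = "churned"   # a faulty transformation overwrites data
table = store.rollback(v0)       # restore the earlier version
print(table)
```

A production system would store snapshots in the warehouse rather than in memory, but the contract is the same: snapshot before a change, roll back by version.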

Pros

- The solution is easy to use and requires little technical expertise.

- It offers a wide variety of data connectors, including custom connectors.

- Users can schedule data transformations with a single click.

- Mozart Data has straightforward integrations with popular vendors such as Salesforce, Stripe, Postgres, and Amplitude.

- A Google Sheets sync is available.

- Mozart Data provides good customer support.

Cons

- Non-native integrations require some custom SQL work.

- The SQL editor is a bit clunky.

Pricing

Mozart Data has three pricing tiers, starting at $1,000 per month plus a $1,000 setup fee. All plans come with a free 14-day trial.

Databricks Lakehouse Platform

Databricks Lakehouse Platform is a comprehensive data management platform that unifies data warehousing and artificial intelligence (AI) use cases on a single platform via a web-based interface, command-line interface, and an SDK (software development kit).

It includes five modules: Delta Lake, Data Engineering, Machine Learning, Data Science, and SQL Analytics. Further, the Data Engineering module enables data scientists, data engineers, and business analysts to collaborate on data projects in a single workspace.

The platform also automates the process of creating and maintaining pipelines and executing ETL operations directly on a data lake, allowing data engineers to focus on quality and reliability to produce valuable insights.

Key Features

- Streamlined Data Ingestion: New files are processed incrementally as they arrive, within either scheduled or continuous jobs, without you having to keep track of state information. Databricks can track new files efficiently without listing them in a directory, with the option to scale to billions of files, and it infers and evolves the schema from source data as it loads into Delta Lake.

- Automated Data Transformation and Processing: Databricks provides an end-to-end solution for data preparation, including data quality checking, cleansing, and enrichment.

- Build Reliability and Quality Into Your Data Pipelines: With Databricks, you can easily monitor your data pipelines to identify issues early on and set up alerts to notify you immediately when there is a problem. In addition, the platform allows you to version-control your pipelines, so you can roll back to a previous version if necessary.

- Efficiently Orchestrate Pipelines: With Databricks Workflows, you can orchestrate and schedule data pipelines and chain multiple steps together into a single pipeline.

- Seamless Collaborations: Once data has been ingested and processed, data engineers can unlock its value by letting every employee in the company access and collaborate on it in real time. Teams can view and analyze data and share datasets, forecasts, models, and notebooks, all while relying on a single source of truth that keeps results consistent and reliable across workloads.
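The incremental ingestion described under Streamlined Data Ingestion comes down to remembering which files have already been loaded. Databricks handles this with its own checkpointing (Auto Loader), and can use file notifications instead of directory listing. The Python sketch below only illustrates the general pattern: it does list a local directory for simplicity, and the `ingest_new_files` helper, the JSON checkpoint file, and the CSV file names are hypothetical.

```python
import csv
import json
import tempfile
from pathlib import Path


def ingest_new_files(landing_dir, checkpoint_path):
    """Load only CSV files not recorded in the checkpoint, then update it."""
    checkpoint = Path(checkpoint_path)
    seen = set(json.loads(checkpoint.read_text())) if checkpoint.exists() else set()

    rows = []
    new_files = sorted(p.name for p in Path(landing_dir).glob("*.csv") if p.name not in seen)
    for name in new_files:
        with open(Path(landing_dir) / name, newline="") as f:
            rows.extend(csv.DictReader(f))
        seen.add(name)

    # Persist state so the next run skips everything processed so far.
    checkpoint.write_text(json.dumps(sorted(seen)))
    return new_files, rows


with tempfile.TemporaryDirectory() as tmp:
    landing = Path(tmp) / "landing"
    landing.mkdir()
    state = Path(tmp) / "checkpoint.json"

    (landing / "day1.csv").write_text("id,amount\n1,10\n")
    first = ingest_new_files(landing, state)

    (landing / "day2.csv").write_text("id,amount\n2,25\n")
    second = ingest_new_files(landing, state)  # day1.csv is skipped

print(first[0], second[0], second[1])
```

The second run picks up only `day2.csv`, which is the behavior that lets scheduled or continuous jobs run repeatedly without reprocessing old files.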

Pros

- Databricks Lakehouse Platform is easy to use and set up.

- It is a unified data management platform that includes data warehousing, ETL, and machine learning.

- End-to-end data preparation with data quality checking, cleansing, and enrichment is available.

- It is built on open source and open standards, which improves flexibility.

- The platform offers good customer support.

Cons

- The pricing structure is complex.

Pricing

Databricks Lakehouse Platform costs vary depending on your compute usage, cloud service provider, and geographical location. Databricks offers a 14-day free trial if you run the platform on your own cloud account, as well as a lightweight free trial hosted by Databricks.

Datafold

As a data observability platform, Datafold helps businesses prevent data catastrophes by detecting, evaluating, and investigating data quality issues before they affect productivity.

Datafold monitors data in real time so teams can spot issues quickly and stop them from becoming data catastrophes. It uses machine learning to provide real-time analytics insights, helping data scientists make high-quality predictions from large amounts of data.
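One common way to monitor data continuously is to express each quality rule as a SQL query that should return no rows, and to raise an alert whenever it does. The sketch below shows that pattern with SQLite. The `check_alert` helper, the `orders` table, and the rule names are hypothetical, and this is not Datafold's API.

```python
import sqlite3


def check_alert(conn, name, query, max_rows=0):
    """Run a monitoring query; return an alert message if it returns too many rows."""
    offending = conn.execute(query).fetchall()
    if len(offending) > max_rows:
        return f"ALERT [{name}]: {len(offending)} offending row(s), e.g. {offending[0]}"
    return None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL, customer_id INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 50.0, 7), (2, -5.0, 8), (3, 20.0, None)],
)

# Hypothetical rules: each query should return nothing when the data is healthy.
rules = {
    "negative_amounts": "SELECT id, amount FROM orders WHERE amount < 0",
    "missing_customer": "SELECT id FROM orders WHERE customer_id IS NULL",
}

alerts = []
for name, sql in rules.items():
    message = check_alert(conn, name, sql)
    if message:
        alerts.append(message)
        print(message)
```

Running the same rules on a schedule turns ad hoc queries into monitors; the `max_rows` threshold allows a small tolerance where zero is too strict.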

Key Features

- One-Click Regression Testing for ETL: You can go from 0–100% test coverage of your data pipelines in a few hours. With automated regression testing across billions of rows, you can also see the impact of each code change.

- Data Flow Visibility Across All Pipelines and BI Reports: Datafold makes it easy to see how data flows through your entire organization. By tracking data lineage, you can quickly identify issues and fix them before they cause problems downstream.

- SQL Query Conversion: With Datafold’s query conversion feature, you can take any SQL query and turn it into a data quality alert. This way, you can proactively monitor your data for issues and prevent them from becoming problems.

- Data Discovery: Datafold’s data discovery feature helps you understand your data to draw insights from it more easily. You can explore datasets, visualize data flows, and find hidden patterns with a few clicks.

- Multiple Integrations: Datafold integrates with major data warehouses and frameworks such as Airflow, Databricks, dbt, Google BigQuery, Snowflake, Amazon Redshift, and more.
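Regression testing for ETL typically means diffing a pipeline's output before and after a code change. The sketch below shows the core of such a data diff: rows from two versions of a table are matched on a primary key and classified as added, removed, or changed. The `diff_tables` helper and column names are hypothetical, and this in-memory comparison only suits small tables; it is not Datafold's implementation.

```python
def diff_tables(before, after, key="id"):
    """Compare two lists of row dicts matched on `key`; report per-key differences."""
    old = {row[key]: row for row in before}
    new = {row[key]: row for row in after}
    changed = {}
    for k in old.keys() & new.keys():
        # Collect (old, new) value pairs for every column that differs.
        columns = {
            col: (old[k].get(col), new[k].get(col))
            for col in old[k].keys() | new[k].keys()
            if old[k].get(col) != new[k].get(col)
        }
        if columns:
            changed[k] = columns
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": changed,
    }


# Output of the same model before and after a hypothetical code change.
prod = [{"id": 1, "revenue": 100}, {"id": 2, "revenue": 250}, {"id": 3, "revenue": 75}]
dev = [{"id": 1, "revenue": 100}, {"id": 2, "revenue": 200}, {"id": 4, "revenue": 30}]

result = diff_tables(prod, dev)
print(result)
```

A reviewer can then decide whether a changed `revenue` value for key 2 is an intended fix or a regression before the change reaches production.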

Pros

- Datafold offers a simple, intuitive UI and navigation along with powerful features.

- The platform allows deep exploration of how tables and data assets relate.

- The visualizations are easy to understand.

- Data quality monitoring is flexible.

- Customer support is responsive.

Cons

- The integrations they support are relatively limited.

- The basic alerts functionality could benefit from more granular controls and destinations.

Pricing

Datafold offers two product tiers, Cloud and Enterprise, with pricing dependent on your data stack and integration complexity. Those interested in Datafold will need to book a call to obtain pricing information.

dbt

dbt is a transformation workflow that allows organizations to deploy analytics code in a short time frame via software engineering best practices such as modularity, portability, CI/CD (continuous integration and continuous delivery), and documentation.

dbt Core is an open-source command-line tool allowing anyone with a working knowledge of SQL to create high-quality data pipelines.
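The core idea behind dbt is that each model is just a SELECT statement, and the tool works out the build order from the references between models. The Python sketch below mimics that idea with SQLite and the standard library's `graphlib` (Python 3.9+). The model names, the `{ref:name}` placeholder syntax, and materializing every model as a view are simplifications for illustration; real dbt models are `.sql` files that reference each other with `{{ ref('model_name') }}`.

```python
import re
import sqlite3
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical models: each is only a SELECT; {ref:name} points at another model.
MODELS = {
    "stg_orders": "SELECT id, customer_id, amount FROM raw_orders WHERE amount IS NOT NULL",
    "customer_revenue": (
        "SELECT customer_id, SUM(amount) AS revenue "
        "FROM {ref:stg_orders} GROUP BY customer_id"
    ),
}
REF = re.compile(r"\{ref:(\w+)\}")


def build(conn, models):
    """Materialize each model as a view, parents before children."""
    graph = {name: set(REF.findall(sql)) for name, sql in models.items()}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        sql = REF.sub(lambda m: m.group(1), models[name])  # resolve refs to view names
        conn.execute(f"CREATE VIEW {name} AS {sql}")
    return order


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_orders (id INTEGER, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, 7, 10.0), (2, 7, 5.0), (3, 8, None)],
)

order = build(conn, MODELS)
print(order, conn.execute("SELECT * FROM customer_revenue").fetchall())
```

Because dependencies are inferred from the references, adding a new model never requires editing a separate list of build steps.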

Key Features

- Simple SQL SELECT Statements: dbt uses simple SQL SELECT statements to define data models, which makes it easy for data analysts and data engineers to get started with dbt without learning a new language.

- Pre-Packaged and Custom Testing: dbt comes with pre-packaged tests for data quality, duplication, validity, and more. Additionally, users can create their own custom tests.

- In-App Scheduling, Logging, and Alerting: dbt Cloud has a built-in scheduler you can use to schedule data pipelines. It also logs every pipeline run and generates alerts if there are any issues.

- Version Control and CI/CD: dbt integrates with Git, so you can version data pipelines and deploy them with CI/CD tools such as Jenkins and CircleCI.

- Multiple Adapters: dbt connects to and executes SQL against your database, warehouse, platform, or query engine through a dedicated adapter for each technology. Most adapters are open source and free to use, just like dbt Core.
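dbt's four built-in generic tests are `unique`, `not_null`, `accepted_values`, and `relationships`. In dbt they are declared in YAML and compiled to SQL queries that return failing records, so a test passes when nothing comes back. The Python functions below are hypothetical re-implementations that only show what each test checks; they skip nulls in every test except `not_null`, and the sample tables are made up.

```python
from collections import Counter


def unique(rows, column):
    """Rows whose non-null value appears more than once."""
    counts = Counter(row[column] for row in rows if row[column] is not None)
    return [row for row in rows if row[column] is not None and counts[row[column]] > 1]


def not_null(rows, column):
    """Rows where the column is missing."""
    return [row for row in rows if row[column] is None]


def accepted_values(rows, column, values):
    """Rows whose non-null value is outside the allowed set."""
    return [row for row in rows if row[column] is not None and row[column] not in values]


def relationships(rows, column, parent_rows, parent_column):
    """Rows whose non-null foreign key has no match in the parent table."""
    parent_keys = {row[parent_column] for row in parent_rows}
    return [row for row in rows if row[column] is not None and row[column] not in parent_keys]


customers = [{"id": 1}, {"id": 2}]
orders = [
    {"id": 10, "customer_id": 1, "status": "shipped"},
    {"id": 11, "customer_id": 3, "status": "returned"},
    {"id": 11, "customer_id": None, "status": "pending"},
]

results = {
    "unique(id)": unique(orders, "id"),
    "not_null(customer_id)": not_null(orders, "customer_id"),
    "accepted_values(status)": accepted_values(orders, "status", {"shipped", "returned", "pending"}),
    "relationships(customer_id)": relationships(orders, "customer_id", customers, "id"),
}
for test, failures in results.items():
    status = "PASS" if not failures else f"FAIL ({len(failures)} failing rows)"
    print(f"{test}: {status}")
```

Returning the failing rows rather than a boolean matches the "a test is a query for bad data" approach and makes failures easy to inspect.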

Pros

- dbt offers simple SQL syntax.

- Pre-packaged tests and alerts are available.

- The platform integrates with Git for easy deployment.

Cons

- The command-line tool can be challenging for data analysts who are not familiar with the command line or SQL.

Pricing

dbt offers three pricing plans:

- Developer: This is a free plan available for a single seat.

- Team: $50 per developer seat per month, with 50 read-only seats included. This plan includes a 14-day free trial.

- Enterprise: Custom pricing based on the required features. Prospective customers can request a free demo.

Choosing DataOps Tools

Choosing a DataOps tool depends on your needs and preferences. However, as with anything else in technology, it's essential to do your research and take advantage of free demos and trials before settling on something.

With plenty of great DataOps tools available on the market today, you’re sure to find one that fits your team’s needs and your budget.
