Nijeer Parks was accused of shoplifting and attempting to hit an officer with his car—despite the fact that he was 30 miles away when the crime took place. So far, he’s the third Black man to have been arrested because of an incorrect facial recognition match.

Facial recognition technology is powered by artificial intelligence (AI), which often inherits bias, whether from the people who program it or from the data samples used to train it.

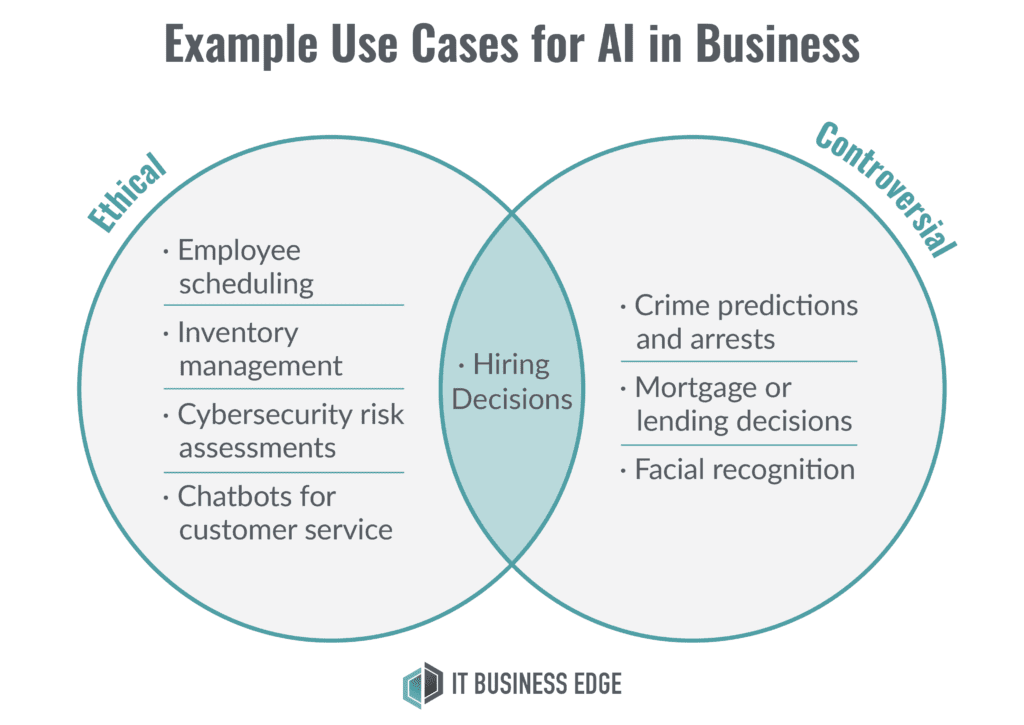

AI is commonplace in today’s business world, with 93% of businesses already using it despite the issues it presents. AI is great for tasks like sales forecasting and inventory management, but problems arise when it is applied to people in uses like hiring or predictive analytics. It’s not AI that’s the enemy here, however; it’s bias. And there are ways that AI can even help correct biases if organizations use it correctly.

Here we’ll outline some of the biases that can be inherent in AI applications and offer some ways that results can be improved.

Examining AI bias

- Facial recognition error rates

- Using historical data to inform future decisions

- Crime predictions

- Hiring and discriminatory language

- AI isn’t always controversial

Facial Recognition Error Rates

AI isn’t always as accurate as developers would like it to be. For example, in facial recognition software, the maximum error rate for identifying light-skinned men is only 0.8%, while the error rate for dark-skinned women can be as high as 34.7%. That means roughly one in three dark-skinned women could be misidentified, compared with fewer than one in 100 light-skinned men.
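The "one in N" framing follows from simple arithmetic on the two percentages. The snippet below is a minimal sketch of that conversion; the function name `one_in_n` is hypothetical and the only inputs are the two error rates cited above.

```python
def one_in_n(error_rate_percent: float) -> float:
    """Convert a percentage error rate into 'one in N' odds."""
    return 100.0 / error_rate_percent


# Maximum error rates (in percent) cited in the paragraph above.
light_skinned_men = 0.8
dark_skinned_women = 34.7

print(f"Light-skinned men: about 1 in {one_in_n(light_skinned_men):.0f}")
print(f"Dark-skinned women: about 1 in {one_in_n(dark_skinned_women):.1f}")
print(f"Disparity: about {dark_skinned_women / light_skinned_men:.0f}x")
```

Run as written, this reports about 1 in 125 for light-skinned men, about 1 in 2.9 for dark-skinned women, and a gap of roughly 43 times between the two groups.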

Evident ID is an example of a company that offers facial recognition-enabled background checks by comparing applicant-provided images against online databases and government documents. In theory, these checks are a good way to ensure people are who they claim to be. However, they can be risky because glasses, scars, and even makeup can skew the results.

The gap in error rates between demographic groups likely comes from the model’s training. Developers may train facial recognition software on more photos of light-skinned people than dark-skinned people, and on more photos of men than women, which makes the platform better at identifying light-skinned men.

The more training AI gets with minority groups, the better it will be at making accurate judgments. Mike O’Malley, SVP of SenecaGlobal, said, “Data scientists, who are the ones that write and tune and evaluate the algorithms, need to be well informed and need to be in place to oversee all of this, otherwise it’s just a computer algorithm run amok. It’s going to optimize to whatever it’s been trained to do, but you may have a lot of unintended consequences [without oversight].”

Using Historical Data to Inform Future Decisions

The bias found in AI is a reflection of the society that has created it. Many types of AI used in business applications rely on historical data to inform their future decisions. This isn’t bad on the surface, but when the decisions are about people, the data often carries bias with it. Examples include a system automatically rejecting a rental application for a Black family in a majority white neighborhood, or a healthcare system prioritizing the needs of white patients over those of Black patients.

In hiring decisions, relying on historical data might lead an AI system to favor men over women or white candidates over minorities because that’s who the company has hired in the past. Companies especially need to be cognizant of this if they’ve been around a long time, say more than 50 or 60 years. Past bias informs future bias, and organizations can’t move forward until they address it.

Top tech companies stumble

Even the world’s leading tech companies aren’t immune to the bias found in AI. Google, for example, fired two of its top AI ethics researchers in early 2021. Both had been looking at some of the downsides of critical Google search products. Several other employees quit following the dismissals, and a handful of academics have backed out of Google-funded projects while others have agreed not to accept funding from the company in the future. David Baker, former director of Google’s Trust & Safety Engineering group, said, “I think it definitely calls into question whether Google can be trusted to honestly question the ethical applications of its technology.”

Google isn’t the only major tech company having issues. Twitter also made major adjustments to its automated picture-cropping algorithm earlier this year because the company found it was favoring white faces over those of people of color. The tests Twitter conducted before releasing the algorithm were based on a much smaller sample size than the analysis performed later. Now, the company plans to stop using the saliency algorithm altogether.

Minorities and lending institutions

Some banks use predictive analytics to determine whether they should grant mortgages to people. However, minority groups often have less information in their credit histories, so they’re at a disadvantage when it comes to getting a mortgage loan or even approval on a rental application. Because of this, predictive models used for granting or rejecting loans are not accurate for minorities, according to a study by economists Laura Blattner and Scott Nelson.

Blattner and Nelson analyzed the amount of “noise” on credit reports, meaning data that institutions can’t use to make an accurate assessment. The risk score itself, for example, could be an overestimate or underestimate of the actual risk that an applicant poses, so the problem isn’t in the algorithm itself but in the data informing it.

“It’s a self-perpetuating cycle,” Blattner said. “We give the wrong people loans and a chunk of the population never gets the chance to build up the data needed to give them a loan in the future.”

Solving the problem of historical data use in AI requires human oversight. O’Malley said, “AI shows you what’s in the data, and it helps you mine insights. AI isn’t going to tell you whether that’s good or bad or whether you should do it.”

Crime Predictions

Correctional Offender Management Profiling for Alternative Sanctions, also known as COMPAS, is an AI algorithm that U.S. courts use to predict how likely it is that a defendant will commit a similar offense if they are released. However, it’s inaccurate, and unevenly so: Black defendants are likely to be misclassified 45% of the time, while white defendants may only be misclassified 23% of the time.
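A gap like this only becomes visible when error rates are broken out by group rather than averaged over everyone. The following is a minimal sketch of such an audit, assuming each record pairs a prediction with the real outcome. The function name, the group labels, and the toy records are all made up for illustration and are not COMPAS data.

```python
from collections import defaultdict


def misclassification_rate_by_group(records):
    """Return the share of wrong predictions for each group.

    Each record is a (group, predicted_reoffend, actually_reoffended) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up toy records for two hypothetical groups, "A" and "B".
records = [
    ("A", True, False), ("A", True, True), ("A", False, False), ("A", True, False),
    ("B", False, False), ("B", True, True), ("B", False, False), ("B", True, False),
]
rates = misclassification_rate_by_group(records)
print(rates)
```

In this toy data the model is wrong half the time for group A and a quarter of the time for group B, even though a single overall error rate would hide the difference.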

PredPol has similar issues. This software attempts to predict where crime is most likely to happen, which may lead to heavier policing in low-income communities and those with a large population of people of color, regardless of their actual crime rates.

While AI can provide valuable leads to understaffed police departments, these leads have to be taken with a grain of salt. There has to be a human evaluating the matches and deciding whether or not the output from these types of software falls in line with what they already know about the case. And if there’s not already a high crime rate in an area, police don’t need to be dispatched to the community just because it’s primarily made up of people of color.

More policing is likely to lead to a higher arrest rate, whether or not the actual crime rate is higher. To better train predictive policing algorithms, analysts should limit the historical data they use and specifically exclude arrest records from before and during the Civil Rights Movement. Because more laws targeted people of color during this period, they were likely arrested and charged more often, which skews the data and adds bias to present-day systems. Additionally, these systems should prioritize violent crimes over property crimes and target policing based on actual reported crimes rather than arrests.
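Those three recommendations amount to a data-selection step before any model is trained. The sketch below shows one way that step might look. The `Incident` fields, the function name, and especially the cutoff date are assumptions made for illustration; where to draw the line is a judgment call for analysts, not something this article specifies.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Incident:
    occurred_on: date
    source: str    # "reported" (a crime report) or "arrest"
    violent: bool


# Assumed cutoff for illustration only; the right date is a policy decision.
CUTOFF = date(1969, 1, 1)


def select_training_records(incidents, cutoff=CUTOFF):
    """Keep recent reported crimes only, with violent crimes listed first."""
    kept = [
        i for i in incidents
        if i.occurred_on >= cutoff and i.source == "reported"
    ]
    # sorted() is stable, so the original order is kept within each class.
    return sorted(kept, key=lambda i: not i.violent)


incidents = [
    Incident(date(1962, 5, 1), "arrest", False),
    Incident(date(2015, 3, 9), "arrest", True),
    Incident(date(2018, 7, 4), "reported", False),
    Incident(date(2019, 1, 2), "reported", True),
]
selected = select_training_records(incidents)
print([i.occurred_on.year for i in selected])
```

Here both arrest records are dropped, and the violent reported crime from 2019 is ordered ahead of the property crime from 2018.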

Hiring and Discriminatory Language

Often unintentionally, managers can bring their own biases into hiring decisions. Race, gender, and even college affiliation can affect a hiring manager’s feelings about a candidate before they’ve even interviewed them. AI-enabled hiring platforms, like XOR or HireVue, can hide these categories from a résumé and evaluate candidates purely on skills and experience.

“When used properly, AI can help to reduce hiring bias by creating a more accessible job application process and completely blind screening and scheduling process,” said Birch Faber, marketing VP for XOR. “Companies can also create an automated screening and scheduling process using AI so that factors like a candidate’s name, accent, or origin don’t disqualify a candidate early in the hiring process.

“Every candidate gets asked the same questions and is scored by the AI in the same exact fashion.”
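At its simplest, blind screening means removing identifying fields before anyone, human or algorithm, scores the candidate. The snippet below is a minimal sketch of that idea and does not describe how XOR or HireVue work internally. The field names and the `blind_copy` helper are hypothetical.

```python
# Hypothetical field names; a real applicant record may label these differently.
HIDDEN_FIELDS = {"name", "gender", "race", "college", "photo_url"}


def blind_copy(candidate: dict) -> dict:
    """Return a copy of the candidate record without identifying fields."""
    return {key: value for key, value in candidate.items()
            if key not in HIDDEN_FIELDS}


candidate = {
    "name": "Jordan Smith",
    "college": "State University",
    "skills": ["Python", "SQL"],
    "years_experience": 6,
}
screened = blind_copy(candidate)
print(screened)
```

Only the skills and years of experience reach the scoring step. Note that dropping fields is not sufficient on its own, since other details in a résumé can still act as proxies for the hidden ones.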

Companies often include language in their job descriptions that may be unnecessarily gendered or otherwise discriminatory. For example, terms like “one of the guys” or “he” often deter female candidates from applying, while requirements involving the ability to lift a certain amount rule out disabled candidates and aren’t necessary for many of the jobs they appear on.

Text analysis tools use AI to examine job descriptions for gender bias and weak language. This will not only help organizations ensure they’re reaching a diverse audience but also help them strengthen their job descriptions to find the right candidates. Gender Decoder is a free tool that examines text for gendered language. Companies can input their job descriptions to make sure they’re not unintentionally driving applicants away.
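A basic version of this kind of check is a word-list scan. The sketch below is an illustration of the general approach under that assumption, not Gender Decoder’s actual implementation, and the two tiny word lists are made up for the example.

```python
import re

# Tiny illustrative word lists; real tools use much longer, researched lists.
MASCULINE_CODED = {"he", "guys", "dominant", "competitive"}
FEMININE_CODED = {"she", "supportive", "nurturing"}


def find_gendered_terms(text: str) -> dict:
    """Return the gender-coded words found in a job description."""
    words = re.findall(r"[a-z']+", text.lower())
    return {
        "masculine": sorted({w for w in words if w in MASCULINE_CODED}),
        "feminine": sorted({w for w in words if w in FEMININE_CODED}),
    }


ad = "He will be one of the guys on a competitive, supportive team."
flags = find_gendered_terms(ad)
print(flags)
```

For this sample ad, the scan flags "competitive," "guys," and "he" as masculine-coded and "supportive" as feminine-coded, which gives a writer a concrete list of words to reconsider.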

AI Isn’t Always Controversial

AI does have many uses that don’t have the same risk of bias as others. Typically, business decisions make better use cases than people decisions.

Employee scheduling

The average manager spends 140 hours per year making shift schedules for manufacturing, retail, and service industry jobs. For an eight-hour workday, that’s almost 18 days that they could be spending to improve the business instead. AI scheduling programs can automate this process based on skill sets to take work off the manager’s plate. They can also forecast likely sales, telling businesses how many people they’ll need on staff each day.

Handling inventory management

Many businesses use AI to keep track of their inventory and reorder more when stock drops below a certain level. Using historical data and current market trends, AI can estimate how many of each product a business will sell, ensuring they have the right materials on hand. Additionally, the business can use these estimates to avoid overstocking, which can lower its overhead costs. Depending on the amount of inventory they keep, businesses can save between $6,000 and $72,000 by adding AI to their inventory management system.
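The reorder logic itself is straightforward once a sales forecast exists. The sketch below assumes the forecast comes from elsewhere (here it is just a number passed in) and uses a common reorder-point rule. The function name, parameters, and the 30-day target are hypothetical choices, not a description of any particular product.

```python
import math


def reorder_quantity(on_hand, daily_forecast, lead_time_days,
                     safety_stock=0, target_days=30):
    """Return how many units to order, or 0 if stock is still sufficient.

    Stock is reordered once it falls below the expected demand during the
    supplier lead time plus a safety buffer.
    """
    reorder_point = daily_forecast * lead_time_days + safety_stock
    if on_hand >= reorder_point:
        return 0
    # Order up to a target level rather than a fixed lot to limit overstock.
    target_level = daily_forecast * target_days + safety_stock
    return max(0, math.ceil(target_level - on_hand))


print(reorder_quantity(on_hand=40, daily_forecast=10, lead_time_days=7, safety_stock=20))
print(reorder_quantity(on_hand=200, daily_forecast=10, lead_time_days=7, safety_stock=20))
```

With 40 units on hand the reorder point of 90 has been crossed, so the function suggests ordering 280 units. With 200 on hand it suggests ordering nothing.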

Other examples include cybersecurity risk assessments and AI chatbots for customer service. However, until AI becomes more accurate, businesses should be wary about using it to make decisions related to people—unless they’ve taken the rigorous steps we’ve outlined to combat bias. Otherwise, it’s too easy for bias to creep in and perpetuate the diversity issues that many organizations already face.
