Oracle announced that it is making available GraphPipe, a protocol for transmitting tensor data to and from artificial intelligence (AI) models over a network.

GraphPipe is available in Oracle’s GitHub repository. Vish Abrams, architect for cloud development at Oracle, says GraphPipe makes it simpler to query AI models by providing a consistent method for deploying them across clients and servers.

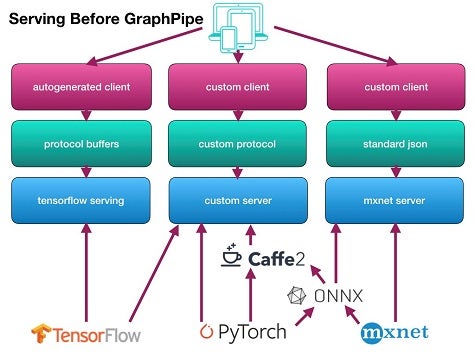

Abrams says the whole process associated with deploying AI models today is overly complex. GraphPipe was developed by Oracle to create a common protocol through which any AI model can be queried without the need to create custom client software for every AI model deployed.

“The communications protocol can now always be the same,” says Abrams.

GraphPipe provides organizations deploying AI models with a set of FlatBuffers definitions, guidelines for serving models consistently according to those definitions, examples for serving models from various machine learning frameworks, and client libraries for querying models served via GraphPipe. That approach eliminates the need to rely on inefficient formats such as JavaScript Object Notation (JSON) or on more complex protocol buffers that are tied to a single framework, such as TensorFlow.
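To illustrate why a text format such as JSON is a poor fit for tensor data, the following minimal Python sketch encodes the same small tensor as JSON and as a packed binary payload. The binary layout used here (a rank, the dimensions, then 32-bit floats) is a hypothetical one chosen for illustration only; it is not GraphPipe's actual wire format, which is defined by its FlatBuffers schema. The function names are likewise assumptions.

```python
import json
import struct


def encode_json(shape, values):
    """Encode a tensor as UTF-8 JSON text."""
    return json.dumps({"shape": shape, "data": values}).encode("utf-8")


def encode_binary(shape, values):
    """Encode a tensor with a hypothetical layout (NOT GraphPipe's format):
    uint32 rank, uint32 dimensions, then float32 values, all little-endian."""
    header = struct.pack(f"<I{len(shape)}I", len(shape), *shape)
    body = struct.pack(f"<{len(values)}f", *values)
    return header + body


def decode_binary(payload):
    """Decode a payload produced by encode_binary."""
    (rank,) = struct.unpack_from("<I", payload, 0)
    shape = list(struct.unpack_from(f"<{rank}I", payload, 4))
    count = 1
    for dim in shape:
        count *= dim
    offset = 4 + 4 * rank  # skip the rank field and the dimension fields
    values = list(struct.unpack_from(f"<{count}f", payload, offset))
    return shape, values


# A 2x3 tensor whose values are exactly representable as float32.
shape = [2, 3]
values = [0.125, 0.25, 0.5, 1.0, 2.0, 4.0]

json_bytes = encode_json(shape, values)
binary_bytes = encode_binary(shape, values)

print("JSON bytes:  ", len(json_bytes))
print("binary bytes:", len(binary_bytes))
```

In this sketch the binary payload has a fixed cost of four bytes per value, while the JSON size grows with the number of printed digits, and JSON must also be parsed from text on the receiving side. A real deployment would use the client libraries and schema that GraphPipe provides rather than a hand-rolled layout like this one.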

Abrams says GraphPipe also sets the stage for IT organizations to apply to AI models many of the DevOps principles created to accelerate application development and deployment.

As interest in all things AI continues to increase rapidly across the enterprise, vendors such as Oracle are starting to invest more time and energy in making AI models simpler to consume. Just about every enterprise application going forward will be infused with some form of AI. The issue now is making it as simple as possible for enterprise IT organizations to implement those AI models.