Many organizations embarking on the journey to DevOps and agile programming look to the performance gains, new product development, lower costs, and a host of other benefits. But log management?

Sure, logs are important, but they are also boring. I mean, really boring. Every time an application does anything, it records it in a log where it can be analyzed to refine operations, tighten security or inform new code changes, among other things. Not exactly a fun night on the town.

But this is precisely why it is such a crucial factor in the DevOps model. With applications now being supported by continuous integration/continuous deployment (CI/CD), the number of logs under management not only scales dramatically, but they must be parsed and analyzed in real time.

In many ways, then, successful DevOps comes down to successful log management.

“The DevOps model requires multiple groups with complementary concerns to look at the same operational data generated by services and applications,” said Tanya Bragin, senior director of product management at software developer Elastic. “DevOps engineers need to understand application behavior either through logs or application code instrumentation, and combine this information with infrastructure metrics. At the same time, product and service owners need to keep track of well-defined KPIs and perform SLA calculations so they can monitor product success and report the status back to the business in real time.”

This challenge only intensifies as the DevOps environment matures and the release cycle speeds up. Not only must log data be available to both development and production, but management systems must be able to handle volume spikes with ease as new services come online to support A/B testing and other workflows.

Obviously, this is going to require some changes to current log management practices and infrastructure. For one thing, DevOps teams will require access to multiple log types in order to visualize the entire project pipeline, which means today’s specialized logging systems will need to be integrated under a centralized platform.

“Most enterprises that enter the DevOps model quickly realize that it’s advantageous to combine logs, metrics and APM data generated by their service on a single platform and expose that data to multiple stakeholders,” Bragin said. “They prefer tools that support a diverse set of user interface options, including ad-hoc data visualization and reporting, to enable both technical staff and service owners.”

In many cases, she added, this is leading to the adoption of open source solutions, which tend to provide greater flexibility and foster higher levels of experimentation at scale without large upfront investments.

As well, highly scaled operations are increasingly turning to standardization and automation for everything from logging and data collection to archiving and storage, particularly once projects start moving in parallel.

“Organizations may create monitoring or observability teams to enable DevOps teams with standardized logging, metrics and APM analytics as a service,” Bragin said. “They standardize libraries for structured logging, metrics and APM instrumentation based on the programming framework they adopt. And they automate deployments and teardown of dedicated observability environments on-demand as new services spin up and down.”

Still, it is important not to become overly reliant on automation to basically set the logging process on auto-pilot. Instead, says Bragin, the enterprise should maintain the ability to continually tweak the system to accommodate new products and workflows.

“These observability environments come with filtered views to enable the right context for their data queries and out-of-the-box monitoring dashboards, but may allow users to widen their views to incorporate data coming from other microservices to enable end-to-end transaction tracing and business-level reporting.”

In other words, automation can handle the rote functions, but it takes a human touch to address the nuances.

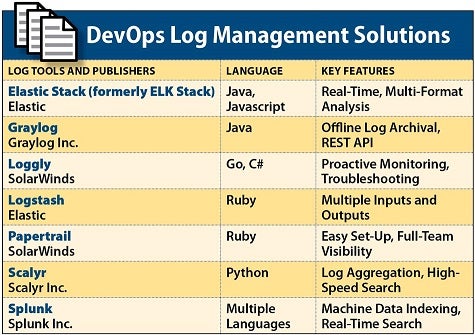

Below are some of the leading log management solutions for DevOps environments:

Elastic Stack

Elastic Stack consists of three open source components: Elasticsearch, Logstash and Kibana. The platform provides an easy means of compiling log data in multiple formats and enabling real-time search, analysis and visualization. In addition to log management, the solution can support security, application performance and other functions.

Graylog

Graylog provides a central platform to collect, process, analyze and visualize log data and provide alerts and action triggering when circumstances warrant. It has a range of enterprise-ready features, such as automated archiving and re-import, user audit log capabilities, LDAP integration and a REST API. It also provides real-time forward data streaming to databases, workstations or any specialized tool.

Loggly

Loggly is a pure-SaaS solution that provides centralized monitoring, analysis, reporting and other functions. It uses open standards to collect and transfer data from any system without the use of proprietary agents and provides easy integration into DevOps tool chains through HTTP endpoints and the system’s own API.

Logstash

Logstash is an open source, server-side data ingestion and procession solution that can pull data from multiple sources and output it to multiple destinations. The system parses events and builds structure around data as it moves from source to source and then unifies it under a common format for easy analysis and visualization. It offers more than 200 plug-ins plus a user-friendly API for customized plug-ins to allow organizations to craft the data pipeline of their choice.

Papertrail

Papertrail is an online log management app that can be set up in less than a minute and provides instant access to thousands of servers. It aggregates app, text and system logs under a single management service featuring real-time tail and search capabilities via browser, command line or API. It also provides instant alerts and continuous detect and analyze functions, along with a permanent archive solution.

Scalyr

Scalyr is a service-based platform that unifies log aggregation, search, analysis and other functions under a single tool. Its core is an event database that acts as a universal repository for all logs, metrics and other operational data. A lightweight agent resides in local servers to transmit logs, metrics and related data to the event database via SSL.

Splunk

Splunk specializes in consolidating and indexing log and machine data to enable real-time search, indexing, visualization and analysis to identify and correction operational and security issues. The platform works with structured, unstructured and multi-line log data, enriching it through access to relational databases, CSV files or other data stores like Hadoop and NoSQL.

Arthur Cole writes about infrastructure for IT Business Edge. Cole has been covering the high-tech media and computing industries for more than 20 years, having served as editor of TV Technology, Video Technology News, Internet News and Multimedia Weekly. His contributions have appeared in Communications Today and Enterprise Networking Planet and as web content for numerous high-tech clients like TwinStrata and Carpathia. Follow Art on Twitter @acole602.